Once upon a time, everything was simple. The network card was slow and had only one queue. When packets arrived, the network card copied them into memory through DMA and raised an interrupt, and the Linux kernel harvested those packets and completed the interrupt processing. As network cards became faster, this interrupt-based model could cause an IRQ storm under a massive packet rate, consuming most of the CPU time and freezing the system. To solve this problem, NAPI (interrupt + polling) was proposed. When the kernel receives an interrupt from the network card, it disables further interrupts from the card and starts polling the device, harvesting packets from the queue as fast as possible. NAPI works nicely with 1 Gbps network cards, which are common nowadays. However, when it comes to 10 Gbps, 20 Gbps, or even 40 Gbps network cards, NAPI alone may not be sufficient: receiving every packet with one CPU and one queue would demand a much faster CPU. Fortunately, multi-core CPUs are now common, so why not process packets in parallel?


Besides the RSS filter, CPU affinity is also important for low-latency networking. The optimal setting is to dedicate one CPU to each queue. First, find the IRQ number of each queue in /proc/interrupts, and then write a CPU bitmask to /proc/irq/<IRQ_NUMBER>/smp_affinity to assign the dedicated CPU. To keep this setting from being overwritten, the irqbalance daemon has to be disabled. Note that, according to the kernel documentation, hyperthreading has shown no benefit for interrupt handling, so it's better to match the number of queues to the number of physical CPU cores.


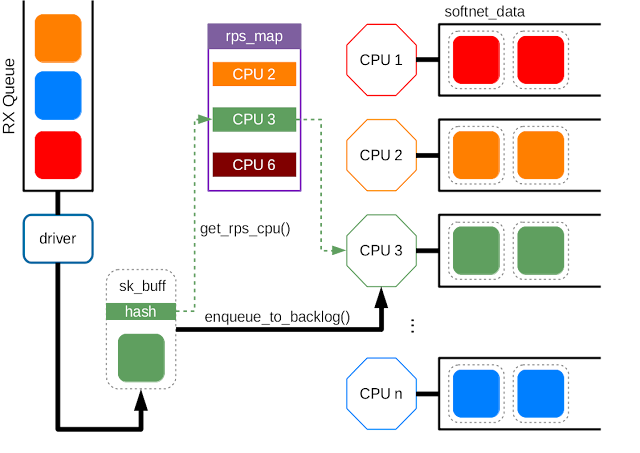


The benefit of using RPS is that it shares the load of packet processing among CPUs. RPS may be unnecessary if RSS is available, since the network card already sorts packets into per-queue/per-CPU queues. However, RPS can still be useful when there are more CPUs than queues: each queue can then be associated with more than one CPU, and RPS distributes its packets among them.


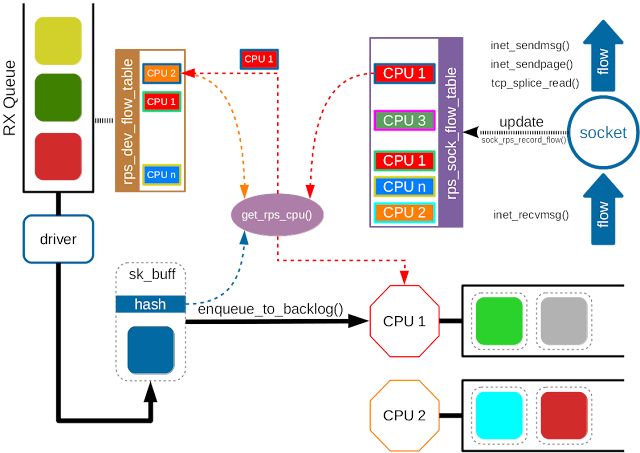

Fig. 3 illustrates how RFS works. The kernel receives the blue packet, which belongs to the blue flow. The per-queue flow table directs the blue packet to CPU 2 (the old CPU), while the socket has updated the sock flow table to use CPU 1 (the new CPU). get_rps_cpu() checks both tables and detects the CPU migration, so it updates the per-queue flow table (assuming there are no outstanding packets on CPU 2) and assigns CPU 1 to the blue packet.


RSS: Receive Side Scaling

Receive Side Scaling (RSS) is the mechanism for processing received packets with multiple RX queues. When a network card with RSS receives packets, it applies a filter to them and distributes them to the RX queues. The filter is usually a hash over packet header fields, and its indirection table and hash key can be configured with "ethtool -X". For example, to spread flows evenly among the first 3 queues:

# ethtool -X eth0 equal 3

Or, if you find a magic hash key that is particularly useful:

# ethtool -X eth0 hkey <magic hash key>

Fig. 1

RPS: Receive Packet Steering

While RSS provides hardware queues, the Linux kernel also implements a software queueing mechanism called Receive Packet Steering (RPS).

When the driver receives a packet, it wraps the packet in a socket buffer (sk_buff), which carries a u32 hash value for the packet. This is the so-called Layer 4 (L4) hash, computed over the source IP, the source port, the destination IP, and the destination port, either by the network card or in software (a software hash is recorded with __skb_set_sw_hash()). Since every packet of the same TCP/UDP connection (flow) has the same hash, it's reasonable to process all of them on the same CPU.

The basic idea of RPS is to send all packets of the same flow to a specific CPU chosen from the per-queue rps_map. Here is struct rps_map:

```c
struct rps_map {
	unsigned int len;	/* number of CPUs in cpus[] */
	struct rcu_head rcu;
	u16 cpus[0];		/* CPU ids; the flow hash selects one */
};
```

The map is rebuilt whenever a CPU bitmask is written to /sys/class/net/<dev>/queues/rx-<n>/rps_cpus. For example, to let queue 0 use the first 3 CPUs of an 8-CPU system, set the three lowest bits (binary 00000111, i.e., 0x7) and write the mask:

# echo 7 > /sys/class/net/eth0/queues/rx-0/rps_cpus

This ensures that packets received on queue 0 of eth0 are processed by CPUs 0-2, the first three CPUs (Linux numbers CPUs from 0).

After the driver wraps a packet in an sk_buff, the packet reaches either netif_rx_internal() or netif_receive_skb_internal(), where get_rps_cpu() maps the hash to an entry in rps_map, i.e., a CPU id. enqueue_to_backlog() then puts the sk_buff on the backlog queue of that CPU for further processing. The per-CPU backlog queues live in the per-CPU variable softnet_data.

Fig. 2

RFS: Receive Flow Steering

Although RPS distributes packets based on flows, it doesn't take userspace applications into consideration. The application may run on CPU A while the kernel puts its packets in the queue of CPU B. Since CPU A cannot use CPU B's cache, the packet data cached on CPU B does the application no good. Receive Flow Steering (RFS) extends RPS to take the application's CPU into account.

Instead of the per-queue hash-to-CPU map, RFS maintains a global flow-to-CPU table, rps_sock_flow_table:

```c
struct rps_sock_flow_table {
	u32 mask;	/* table_size - 1 */
	u32 ents[0];	/* flow id (high bits) | CPU id (low bits) */
};
```

The mask maps a hash value to a table index. Since the table size is rounded up to a power of 2, the mask is set to table_size - 1, and the index for an sk_buff is simply hash & sock_flow_table->mask.

Each entry is split into a flow id and a CPU id by rps_cpu_mask: the low bits hold the CPU id, while the high bits hold the flow id (the upper bits of the flow hash). When the application operates on the socket (inet_recvmsg(), inet_sendmsg(), inet_sendpage(), tcp_splice_read()), sock_rps_record_flow() is called to update the sock flow table with the CPU the application is running on.

When a packet arrives, get_rps_cpu() is called to decide which CPU queue to use.

Here is how get_rps_cpu() looks up the CPU for the packet in the sock flow table:

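```c
/* Look up the flow's entry in the global sock flow table. */
ident = sock_flow_table->ents[hash & sock_flow_table->mask];
/* High bits differ: the entry belongs to another flow, so use plain RPS. */
if ((ident ^ hash) & ~rps_cpu_mask)
	goto try_rps;
/* Low bits: the CPU the application last ran on. */
next_cpu = ident & rps_cpu_mask;
```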

get_rps_cpu() finds the entry using the table mask and checks whether the high bits of the hash match the entry. If they do, it takes the CPU id from the entry and assigns that CPU to the packet. If they don't (the entry is empty or belongs to another flow), it falls back to the RPS map.

The size of the sock flow table can be adjusted through /proc/sys/net/core/rps_sock_flow_entries. For example, to set the table size to 32768:

# echo 32768 > /proc/sys/net/core/rps_sock_flow_entries

Although the sock flow table improves application locality, it also raises a problem. When the scheduler migrates the application to a new CPU, packets still waiting in the old CPU's queue become outstanding, and the application may receive packets out of order. To solve this problem, RFS uses the per-queue rps_dev_flow_table to track outstanding packets.

Here are struct rps_dev_flow and struct rps_dev_flow_table:

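```c
struct rps_dev_flow {
	u16 cpu;		/* CPU currently handling this flow */
	u16 filter;		/* For aRFS */
	unsigned int last_qtail;	/* backlog tail when the flow's last packet was enqueued */
};

struct rps_dev_flow_table {
	unsigned int mask;	/* table_size - 1 */
	struct rcu_head rcu;
	struct rps_dev_flow flows[0];
};
```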

Like the sock flow table, rps_dev_flow_table uses table_size - 1 as its mask, and its size is also rounded up to a power of 2. When a packet of a flow is enqueued, last_qtail is set to the tail position of that CPU's backlog queue. If the application migrates to a new CPU, the sock flow table reflects the change and get_rps_cpu() will want to move the flow to the new CPU. Before doing so, get_rps_cpu() checks whether the current head of the old CPU's queue has already passed last_qtail. If so, there are no more outstanding packets of the flow in that queue, and it's safe to switch CPUs. Otherwise, get_rps_cpu() keeps using the old CPU recorded in rps_dev_flow->cpu.

The size of the per-queue flow table (rps_dev_flow_table) can be configured through the sysfs interface /sys/class/net/<dev>/queues/rx-<n>/rps_flow_cnt.

It's recommended to set rps_flow_cnt to rps_sock_flow_entries / N, where N is the number of RX queues (assuming flows are distributed evenly among the queues).

Fig. 3

Accelerated Receive Flow Steering

Accelerated Receive Flow Steering (aRFS) extends RFS into hardware: the network card itself uses filters to steer each flow to a suitable RX queue. aRFS requires a network card with programmable ntuple filters and driver support. To enable ntuple filters:

# ethtool -K eth0 ntuple on

To support aRFS, a driver has to implement ndo_rx_flow_steer(), which set_rps_cpu() uses to configure the hardware filter. When get_rps_cpu() decides to assign a new CPU to a flow, it calls set_rps_cpu(). set_rps_cpu() first checks whether the network card has ntuple filtering enabled. If so, it queries rx_cpu_rmap to find a suitable RX queue for the flow. rx_cpu_rmap is a map maintained by the driver that tells which RX queue suits a given CPU: either the queue directly associated with that CPU or a queue whose processing CPU is closest in cache locality. After getting the RX queue index, set_rps_cpu() invokes ndo_rx_flow_steer() to ask the driver to create a new filter for the flow. ndo_rx_flow_steer() returns the filter id, which is stored in the per-queue flow table.

Besides implementing ndo_rx_flow_steer(), the driver has to call rps_may_expire_flow() periodically to check whether the filters are still valid and remove the expired filters.

Fig. 4

Conclusion

RSS, RPS, RFS, and aRFS were all introduced before Linux 3.0, so most distributions already ship kernels that include them. RPS and RFS, however, stay disabled until rps_cpus, rps_sock_flow_entries, and rps_flow_cnt are configured as described above. It's worth understanding these mechanisms in depth so that we can find the best configuration for our systems.

By the way, this article only covers the receive side of the kernel document, and Transmit Packet Steering (XPS), the transmit-side mechanism described in the same document, was omitted on purpose. I would like to cover it in another article dedicated to packet transmission (probably including qdisc in tc).

References

Scaling in the Linux Networking Stack

Monitoring and Tuning the Linux Networking Stack: Receiving Data
